Hi!

We've promised more tutorials, and we've specifically promised a tutorial on dynamic facial normal maps in UE4. So here it is! Before we begin...

It took a long time to get started with these, because we were busy with other work, our own projects, and with learning a LOT about the engine. So for now, we haven't made much progress on Lemniscate itself, but this newly acquired knowledge will speed us up when we do have time to work on it.

Now, on to the tutorial.

***

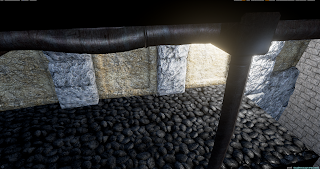

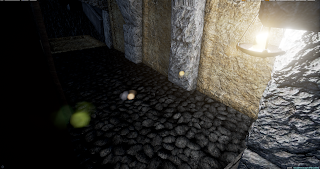

To start out, you must have a finished face model with bone-driven morph targets. You'll have to decide which morphs should activate normal map changes. For example, Sal here has normal maps for raising each individual eyebrow, for frowning, for raising the inner portion of his eyebrows, and for opening his mouth. For a human, there could be additional wrinkles on the nose, under the eyes and such. Because Sal has fur, these normal maps are pretty subtle.

We will probably use a different technology for him, since we use NeoFur now for the fur effect, so this is only for demonstration.

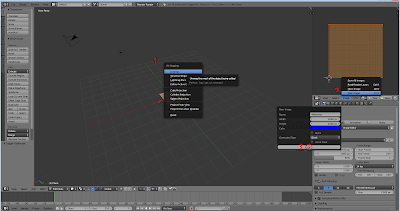

We are using Blender and GIMP in this tutorial, but you can use any modeling and photo editing software you like.

But Blender and GIMP are free, so no excuses!

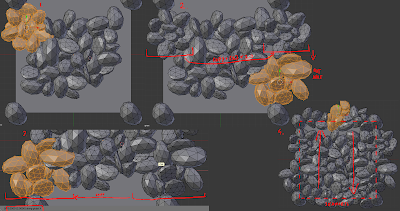

First, you'll have to remove every part of the skeletal mesh that is irrelevant. The only parts to stay should be the parts with the material that will make use of the dynamic normal map (so, in this case, the face).

Duplicate the head. Make one for every morph target you want to influence the normals, and then apply the chosen morphs to the heads, one morph to one mesh.

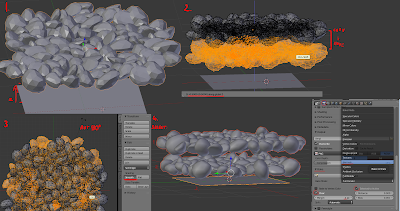

Duplicate all of the heads again, and in the new copies remove absolutely everything other than the parts where you want the normals to change (so the neck, back of the head and ears have to go). Then apply a multires modifier and set it to about x4, so that you can make details on the face with the sculpt tool.

Don't worry about anything else other than the wrinkles, because this normal map will be added to the default normal, so all the base normal map details will still be visible. It's better if you don't make overlapping wrinkles, because all of these will be combined into a single normal map later.

After you're done with sculpting, bake normal maps from each of the sculpted faces onto the original meshes (we have a separate tutorial on normal map baking with Blender; the only difference here is the use of multires). Optionally, you can also bake ambient occlusion maps for more illusion of depth. We didn't, but you can handle them the same way as the normals.

After you're done baking all of them, combine them into a single file (this is the part where you can make adjustments if you have overlapping wrinkles!)

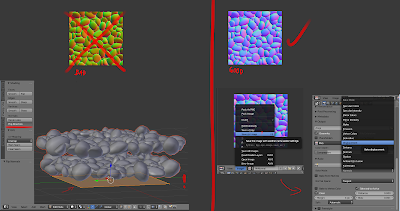

Next, you'll have to make masks for using this additional normal map. It's best if you use the image file's channels for separating different masks. Because a mask only uses greyscale values, you can combine up to 4 of them into a single image file using the red, green, blue and alpha channels. The ideal format to use for this is the Targa format (.tga), because it keeps the channels clean and separate. You can use GIMP or Photoshop to edit the channels individually, but you can probably use any other photo-manipulation software for this as well.

I've created six different wrinkles, so I will save them as two .tga files using the r, g, b channels. Use a black background. When editing the channels, you'll have to use white to fill the spaces where the individual wrinkled spots are.

These will indicate the areas where you want to add your new wrinkle normal map to the default one. After finishing, combine the channels into colored .tga files and save.
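If it helps to picture what "one mask per channel" means, here is a minimal sketch in plain Python. It is not part of our workflow (we did this by hand in GIMP), and the mask names and the tiny 2x2 images are made up for illustration. Each greyscale mask simply becomes one component of every pixel.

```python
def pack_masks(red, green, blue):
    """Pack three same-sized greyscale masks into one RGB image.

    Each mask is a list of rows, each row a list of ints in 0..255
    (0 = black background, 255 = white wrinkle area).
    Returns a list of rows of (r, g, b) tuples.
    """
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("masks must have the same height")
    packed = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("masks must have the same width")
        packed.append(list(zip(r_row, g_row, b_row)))
    return packed


# Hypothetical 2x2 masks, one per wrinkle area.
brow_up_l = [[255, 0], [0, 0]]
brow_up_r = [[0, 255], [0, 0]]
frown = [[0, 0], [128, 0]]

packed = pack_masks(brow_up_l, brow_up_r, frown)
```

A pixel that is white only in the first mask ends up pure red, a pixel that is black in all three stays black, and so on. That is why the combined file looks colored.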

It's time to open up Unreal 4.

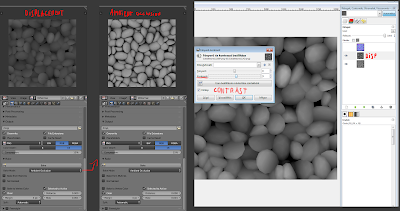

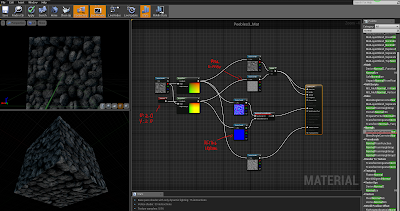

Import the new normal map and the masks, and put them inside the character's material. Arrange the mask textures below each other and add a multiply node next to each of their channels. Connect scalar parameters to the other input of the multiply nodes and name them appropriately - for example, the parameter next to the mask controlling the wrinkles above the left eyebrow will be called BrowUp_L. Name all of them, and make sure not to change their default values from 0.

Create LinearInterpolate nodes (Lerp nodes) for all of the masks, and chain them together using their A inputs. Create a three-value constant node, a Constant3Vector (hold down 3 and click with the left mouse button), and set the third value (blue) to 1 (so, 0,0,1), or just use an empty, flat normal map. Connect this into the first Lerp node's A input.

Connect the multiply nodes to the Lerp nodes' alpha inputs, and our new normal map into the Lerp nodes' B inputs. The last Lerp node's output should go into a BlendAngleCorrectedNormals node's AdditionalNormal input, and the original normal map should be connected to its BaseNormal input. Connect the output of the BlendAngleCorrectedNormals node to the final material node's Normal input.
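To make the node chain a bit less abstract, here is a rough per-pixel sketch of what the Lerp chain computes, written in plain Python rather than material nodes. The function names and sample values are made up for illustration, and the final BlendAngleCorrectedNormals step is left out on purpose; this only covers the part that produces the AdditionalNormal input.

```python
FLAT_NORMAL = (0.0, 0.0, 1.0)  # the (0,0,1) constant fed into the first Lerp's A input


def lerp(a, b, alpha):
    """Component-wise linear interpolation: a when alpha is 0, b when alpha is 1."""
    return tuple(x + (y - x) * alpha for x, y in zip(a, b))


def additional_normal(wrinkle_normal, mask_values, param_values):
    """Chain one Lerp per mask, each using mask * scalar parameter as its alpha."""
    result = FLAT_NORMAL
    for mask, param in zip(mask_values, param_values):
        result = lerp(result, wrinkle_normal, mask * param)
    return result


# One pixel that lies inside the first mask only (hypothetical values).
wrinkle = (0.3, 0.1, 0.9)
masks = [1.0, 0.0]

hidden = additional_normal(wrinkle, masks, [0.0, 0.0])  # all parameters at default 0
shown = additional_normal(wrinkle, masks, [1.0, 0.0])   # first parameter (e.g. BrowUp_L) at 1
```

With every parameter at 0 the result stays flat, so the base normal map is unchanged. Raising a parameter only reveals the wrinkle normal where that parameter's mask is white.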

You are done with the material editor. Let's get started with preparing the skeleton!

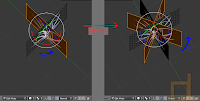

These are bone-driven morph targets. As far as I can tell, there currently isn't a working node for getting the value of a morph target in Blueprints (the 'get morph target' node never returns anything other than 0; correct me if I'm wrong about this). So what we have to do is create a socket for each bone that drives a morph target that we want to add dynamic normal maps to. Then you should rotate the sockets into a position that you can easily remember and calculate with - you should choose a rotation axis (in my case, X) that matches the rotation of the bone that drives the morph.

So, in an ideal case, when the morph target's value is 1, the bone's local X rotation is 90 degrees, and the socket's rotation should read the same. When the value is -1, the rotation should read -90 degrees. This is important, because if the degrees are, for example, 90 for neutral, 0 for -1 and 180 for 1, then the 180 degrees will cause problems. In Unreal, rotations wrap around: if the angle would go above 180, it jumps to -180, which is a nightmare when working with clamps. So, ideally just stick to -90, 0 and 90 degrees.
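Here is a small sketch of the wrap-around problem in plain Python. The function is my own model of the behavior described above, not Unreal's actual code, and the exact treatment of the 180 boundary is an assumption.

```python
def wrap_degrees(angle):
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


# A socket set up with 180 as its "value 1" pose: a small overshoot flips the sign.
at_limit = wrap_degrees(170.0)    # 170.0, still fine
overshoot = wrap_degrees(190.0)   # -170.0, suddenly a large negative number

# A socket set up with 90 as its "value 1" pose: overshoot stays well-behaved.
safe_overshoot = wrap_degrees(100.0)  # 100.0, easy to clamp back to 90
```

This is why a -90 to 90 setup leaves plenty of room on both sides before anything jumps.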

You can see the sockets' local rotations in the Skeleton view if you select them while an animation is playing. You can also output them with a print node and check while playing, or right click on them in a blueprint and 'watch' them, and the values will appear on the blueprint while the game is playing.

Let's move on to the animation blueprint that drives all of this!

It's pretty big, so you may have to download it to see it properly.

You may get all of it by just looking at the picture, but I'll explain everything below.

You need to add this to the Update Animation event in the event graph of the character's anim blueprint. I've added a bool that can turn off the whole thing if it's not needed. Then, the blueprint makes sure that the variable holding the material of the face isn't empty; if it is, the blueprint will create a dynamic material out of the appropriate material and use that. (The dynamic part is important: parameters can only be updated in dynamic materials, and so far I've only managed to create them during runtime. So this part will happen when the anim blueprint starts updating.)

Then comes the math. I'll show you the easiest example: you want to figure out when to activate the normal map for wrinkling the forehead above the left brow. First, you'll have to get the rotation of the socket that belongs to the appropriate morph target. Make sure that you get the rotation in component space (the rotation compared to the original rotations of the socket), not in world space, because that rotation is compared to the world's rotation. You can get component space by using the Socket Transform node instead of the simple rotation node. In our case, when the morph target's value is 1, the socket's rotation is 90.

The material parameter's values are: 0 for invisible, 1 for completely visible, and everything in between is partly visible, depending on the number. For this reason, we have to map 90 degrees to the number 1, and 0 degrees to the number 0. The easiest way to do this is using the 'normalize to range' node. This node lets you type in a minimum and maximum value, and outputs the position of the input number on this scale as a number from 0 to 1 (for example, on a range of 0 to 100, 87 would be 0.87).
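The mapping itself is simple enough to write out. This is a minimal Python sketch of the formula, not Unreal's implementation; in particular, how the real node handles an empty range is not something I'm modeling here, so the sketch just rejects it.

```python
def normalize_to_range(value, range_min, range_max):
    """Return where value sits between range_min and range_max (0 at min, 1 at max)."""
    if range_min == range_max:
        raise ValueError("range_min and range_max must differ")
    return (value - range_min) / (range_max - range_min)


example = normalize_to_range(87.0, 0.0, 100.0)    # the 0 to 100 example from above
half_raised = normalize_to_range(45.0, 0.0, 90.0)  # socket halfway to its 90 degree pose
```

Note that nothing here limits the result to 0..1: an input outside the range gives an output outside 0..1.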

Once we have this number, we just have to set it as the scalar material parameter controlling the normal map's opacity, and we're done. It's a good idea to clamp the values of the socket rotations, because animations are obviously not limited to 90 degrees of movement, so there can be slip-ups. (This is where the jump from 180 to -180 would make things hard: -180 is well below 0 or even -90, so it would instantly make the value go from 1 to 0 and make the normal map invisible, which is something that we don't want.)
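Put together, the simple case looks roughly like this in Python. The function names are hypothetical and the 90 degree maximum is the setup described in this tutorial; in the actual project this is done with Blueprint nodes, not code.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def rotation_to_opacity(rotation_deg, max_deg=90.0):
    """Clamp the socket rotation to 0..max_deg, then scale it to 0..1."""
    return clamp(rotation_deg, 0.0, max_deg) / max_deg


normal_case = rotation_to_opacity(45.0)    # 0.5
slip_up = rotation_to_opacity(120.0)       # clamped to 90 degrees, so 1.0
wrapped = rotation_to_opacity(-180.0)      # a wrapped value clamps to 0.0
```

The last line is the failure described above: if the rotation ever wraps to -180, the clamp can't save you and the wrinkle switches off instantly.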

A slightly more complicated version is if both the positive and negative morph target values are bound to normal maps. You'll have to do the first part the same way, but you have to get the absolute value of the rotation degrees of the socket (negative numbers become positive, positive numbers stay positive), and then normalize to range. The rest is the same.
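As a sketch in Python (hypothetical function name, same 90 degree setup assumed), the only change is taking the absolute value first:

```python
def symmetric_opacity(rotation_deg, max_deg=90.0):
    """Opacity for a normal map driven by both positive and negative rotation."""
    magnitude = min(abs(rotation_deg), max_deg)  # absolute value, limited to max_deg
    return magnitude / max_deg


positive = symmetric_opacity(45.0)    # 0.5
negative = symmetric_opacity(-45.0)   # also 0.5
```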

The only other variation we're using is multiple morph targets triggering the same normal map. If you want some of the morphs to affect the normal map's opacity a little less than others, you'll have to multiply their output amount by some less-than-1 number, like 0.8. At the end, you'll have to add all the affecting morphs' values together, then clamp the resulting number between 0 and 1. That's all there is to it, really.
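In Python, that combination could be sketched like this. The function name and the example weights are made up for illustration; the opacities are assumed to be the already normalized 0..1 values of each morph.

```python
def combined_opacity(contributions):
    """Sum weighted opacities and clamp the total to 0..1.

    contributions is an iterable of (opacity, weight) pairs, where opacity is
    a morph's normalized 0..1 value and weight scales its influence.
    """
    total = sum(opacity * weight for opacity, weight in contributions)
    return max(0.0, min(1.0, total))


# Two morphs driving the same wrinkle, the second one slightly weaker.
partial = combined_opacity([(0.5, 1.0), (0.5, 0.8)])   # about 0.9
saturated = combined_opacity([(1.0, 1.0), (1.0, 0.8)])  # 1.8 before the clamp, 1.0 after
```

Without the final clamp, two strong morphs at once would push the parameter above 1.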

For testing, I suggest you create a morph target test animation where you operate all of the morphs individually and in order. You'll be able to clearly see if all of the morphs are working. For me, there was a lot of trial and error involved, but you could minimize this by clearly noting and setting all the sockets' rotations.